It is reasonable to say that digital markets were the main protagonists of the last legislature when it comes to the European Commission’s efforts to offer regulatory frameworks for innovative products. It is also fair to say that those efforts have mainly amplified the so-called “Brussels effect”, a term coined by the jurist Anu Bradford of Columbia University to describe the EU’s power to push other countries to align their regulation with its own and to push non-EU multinationals to revise their business practices.

The EU delivered two groundbreaking pieces of legislation, the Digital Markets Act (DMA) and the Digital Services Act (DSA), with the Artificial Intelligence Act (AI Act) in its final stages. Lacking the latter’s final text (which is set to be published in the EU Official Journal on 12 July), we will focus our analysis on the impact of the DMA and DSA on the development of AI in the European Union and the challenges businesses in the sector will likely face soon.

Before going deeper into the analysis, it is worth briefly reviewing the main provisions of the DMA and the DSA.

The Digital Markets Act includes:

1). Gatekeeper Designation:

Identifies large platforms as “gatekeepers” based on significant economic impact and market position.

2). Prohibitions on Self-Preferencing:

Gatekeepers cannot favor their own products over third-party products on their platforms.

3). Interoperability Requirements:

Ensures gatekeeper services are compatible with third-party software and services.

4). Data Portability:

Users can easily transfer data between platforms to reduce lock-in effects.

5). Restrictions on Data Combining:

Limits on merging personal data from different services to protect privacy.

6). Limitations on Pre-Installed Apps:

Restricts pre-installing apps and default settings to increase user choice.

7). Penalties:

Fines up to 10% of worldwide turnover for non-compliance and potential structural remedies.

On the other hand, the Digital Services Act’s main provisions include:

1). Transparency Requirements:

Platforms must disclose content moderation, algorithmic recommendations, and advertising practices.

2). Content Moderation:

Robust systems for handling illegal content, with additional obligations for very large online platforms (VLOPs).

3). User Protection:

Prohibits manipulative design (dark patterns), protects minors, and allows opting out of profiling-based recommendations.

4). Reporting Mechanisms:

Easy-to-use tools for reporting illegal content and cooperation with trusted flaggers.

5). Targeted Advertising Restrictions:

Bans ads based on sensitive data and targeting minors, with transparency about ad targeting.

6). Risk Assessments for Large Platforms:

Annual risk assessments for VLOPs and very large online search engines (VLOSEs) to mitigate systemic risks.

7). Penalties:

Fines up to 6% of global turnover for non-compliance.
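
To make the two penalty ceilings concrete, the short Python sketch below computes the maximum exposure from the percentages listed above (10% under the DMA, 6% under the DSA). The function name `max_fine` and the turnover figure are hypothetical and chosen only for illustration; actual fines are decided case by case and may be far below the cap.

```python
from decimal import Decimal

# Fine ceilings as listed in the provisions above,
# expressed as a share of worldwide annual turnover.
FINE_CAPS = {"DMA": Decimal("0.10"), "DSA": Decimal("0.06")}


def max_fine(regulation: str, worldwide_turnover_eur: Decimal) -> Decimal:
    """Return the upper bound of a fine under the given regulation."""
    if regulation not in FINE_CAPS:
        raise ValueError(f"Unknown regulation: {regulation}")
    return FINE_CAPS[regulation] * worldwide_turnover_eur


if __name__ == "__main__":
    turnover = Decimal(50_000_000_000)  # hypothetical EUR 50 billion turnover
    for name in FINE_CAPS:
        print(f"{name}: up to EUR {max_fine(name, turnover):,.0f}")
```

For a company with the assumed turnover, the gap between the two ceilings alone amounts to EUR 2 billion, which helps explain why gatekeepers treat DMA compliance as a board-level issue.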

The Digital Services Act (DSA) and Digital Markets Act (DMA) are poised to significantly influence AI development in the European Union while presenting substantial compliance challenges for companies operating in this space.

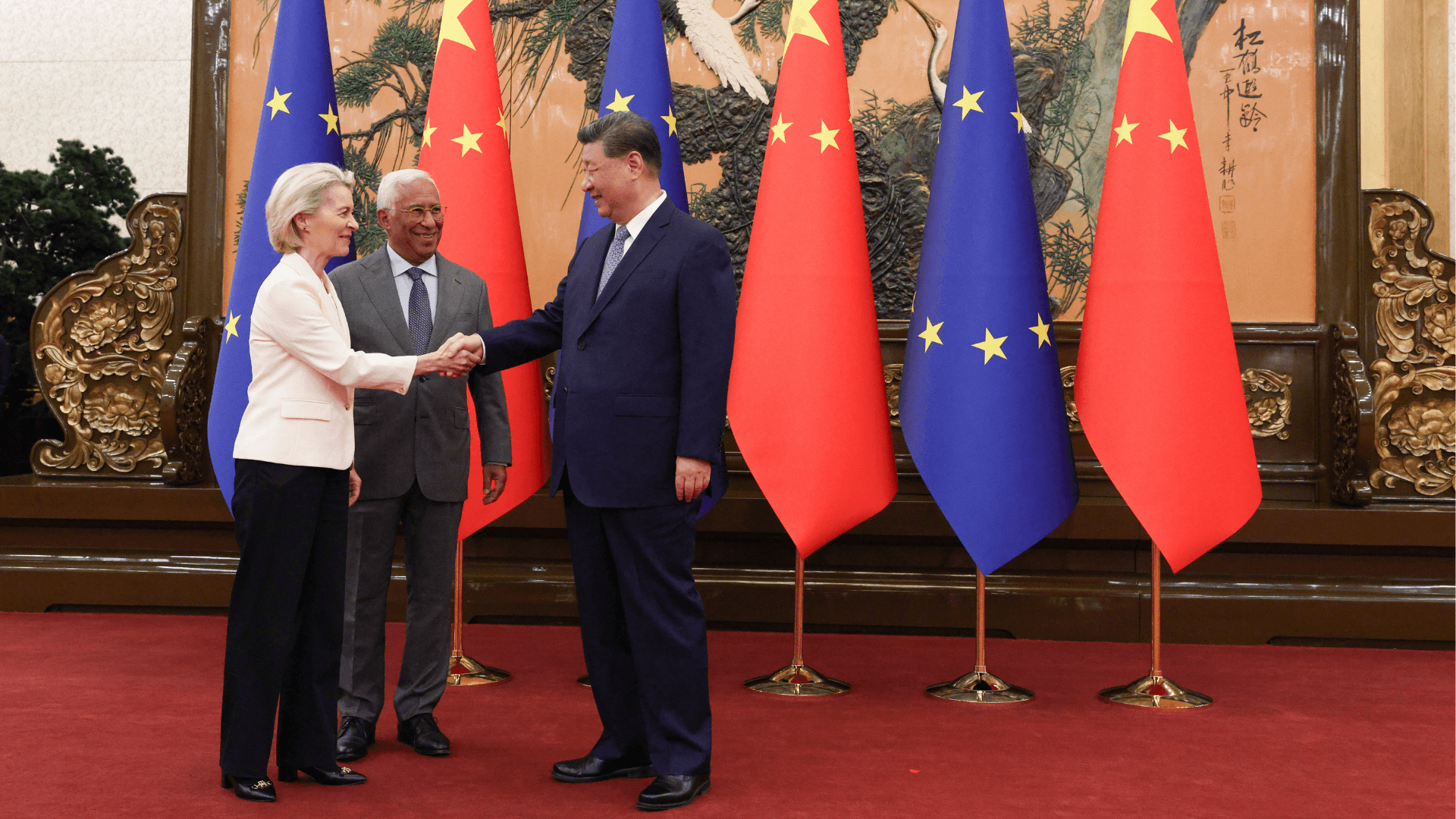

We got a sense of it this month with Apple: the European Commission said it believed Apple’s rules of engagement did not comply with the DMA “as they prevent app developers from freely steering consumers to alternative channels for offers and content.”

In addition, the Commission has opened a new non-compliance procedure against the Cupertino tech giant over concerns its new contract terms for third-party app developers also fall short of the DMA’s requirements.

It is the third non-compliance investigation the Commission has opened into Apple since the DMA came into force last year, and the sixth launched in total. Of the other three, two concern Google and one concerns Meta, the owner of Facebook and Instagram.

For its part (or in response to the Commission’s investigations), Apple announced that it would delay launching three new artificial intelligence features in Europe because European Union competition rules require the company to ensure that rival products and services can function with its devices. The features will launch in the fall in the US but will not arrive in Europe until 2025. Specifically, Apple is concerned that the interoperability requirements of the DMA could force the company “to compromise the integrity of our products in ways that risk user privacy and data security”.

These regulations can influence new products well beyond their immediate digital domain, spurring further innovation that regulators will, in turn, try to guide through new rules.

Artificial intelligence is the most challenging of these domains (and, for your storyteller, the most exciting). Let’s break down each area in which the DSA and DMA may have a significant impact.

Explainable AI

The requirement for algorithmic transparency could spur advancements in explainable AI, pushing researchers and developers to create models that users and regulators can more easily interpret and understand. This focus on openness might lead to new methodologies and techniques in AI development that prioritise clarity and accountability.

Safety practices

The emphasis on risk assessment, particularly for large platforms, may catalyse more robust AI safety practices and research. Companies will need to thoroughly evaluate the potential risks of their AI systems, which could lead to more comprehensive testing protocols and safety measures being integrated into the AI development process from the early stages.

Data access and portability provisions

Data access and portability provisions could have a dual effect on AI development. On the one hand, these rules might increase the availability of diverse datasets for AI training, potentially leading to more robust and less biased models. On the other hand, they raise new challenges around data protection and responsible data usage, requiring developers to be more cautious and innovative in acquiring and utilising training data.

Advertising

The targeted advertising restrictions may limit specific AI applications in advertising technology. This could shift the focus of AI development towards other use cases and applications, spurring innovation in new areas where AI can provide value without relying on personalised data to the same extent.

Compliance challenges for businesses

While the DMA and DSA aim to foster competition and innovation, they also introduce new compliance costs and complexities. This could weigh most heavily on smaller AI companies and startups, which may find it harder to meet all the regulatory requirements. However, it could also create opportunities for companies that can efficiently navigate these new rules and provide compliant AI solutions.

Algorithmic transparency

One of the primary compliance challenges for businesses is achieving algorithmic transparency. Explaining complex AI systems in a way understandable to users and regulators is technically challenging, especially for advanced machine learning models like deep neural networks. Companies must invest in developing more interpretable AI models or creating robust explanation systems for their existing models.

Content moderation

Content moderation at scale presents another major challenge. It will be fundamental to balance automated AI moderation with human oversight while meeting rapid response requirements. This necessitates sophisticated AI systems that can accurately identify problematic content and efficient human review processes to handle edge cases and ensure accountability.
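
One common way to combine automated moderation with human oversight is to route content by classifier confidence. The Python sketch below illustrates the idea under stated assumptions: the thresholds, the `route` function and the `ModerationDecision` type are hypothetical, and the score is assumed to come from some upstream classifier that is not shown. Neither regulation prescribes these values.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real system would tune them against
# measured false-positive and false-negative rates.
AUTO_REMOVE_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


@dataclass(frozen=True)
class ModerationDecision:
    item_id: str
    action: str  # "remove", "human_review" or "keep"
    score: float


def route(item_id: str, score: float) -> ModerationDecision:
    """Route an item based on the classifier's illegal-content score (0 to 1)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_REMOVE_THRESHOLD:
        action = "remove"  # high confidence: act automatically
    elif score >= HUMAN_REVIEW_THRESHOLD:
        action = "human_review"  # uncertain: escalate to a reviewer
    else:
        action = "keep"
    return ModerationDecision(item_id, action, score)
```

Keeping the score on each decision means the same record can feed both the human review queue and any later transparency reporting.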

Data management

Under these regulations, data management becomes increasingly complex. Ensuring data portability while maintaining security and complying with data combination restrictions requires sophisticated data governance frameworks. In practice, this means granular data controls and, potentially, redesigned data architectures.
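
As a rough illustration of what a granular data control might look like, the Python sketch below merges personal data from two services only when the user has agreed to that specific combination. The `UserConsent` model, the service names and the `combine_profiles` helper are all hypothetical; this is a simplified sketch of a consent gate, not a statement of what the DMA legally requires.

```python
from dataclasses import dataclass, field


@dataclass
class UserConsent:
    user_id: str
    # Unordered pairs of services whose data the user agreed to combine.
    allowed_pairs: set[frozenset[str]] = field(default_factory=set)

    def allow(self, service_a: str, service_b: str) -> None:
        self.allowed_pairs.add(frozenset({service_a, service_b}))

    def permits(self, service_a: str, service_b: str) -> bool:
        return frozenset({service_a, service_b}) in self.allowed_pairs


def combine_profiles(
    consent: UserConsent,
    target_service: str,
    target_data: dict,
    source_service: str,
    source_data: dict,
) -> dict:
    """Merge source data into the target profile only if consent covers the pair."""
    if not consent.permits(target_service, source_service):
        return dict(target_data)  # no consent: keep the services' data separate
    return {**target_data, **source_data}


if __name__ == "__main__":
    consent = UserConsent("user-1")
    maps = {"home_city": "Lisbon"}
    video = {"watch_history": ["intro-to-eu-law"]}
    print(combine_profiles(consent, "video", video, "maps", maps))
    consent.allow("video", "maps")
    print(combine_profiles(consent, "video", video, "maps", maps))
```

Defaulting to separation when no consent is recorded is the design choice that matters here: a missing entry never results in merged data.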

Risk assessment

The obligation to conduct risk assessments and mitigate potential systemic risks of AI systems is significant, especially for large platforms. These companies must develop comprehensive risk assessment methodologies and implement ongoing monitoring and mitigation strategies for their AI systems.

Interoperability requirements

Interoperability requirements present technical challenges in ensuring AI systems can work across different platforms while maintaining performance and security. Companies may need to redesign their AI architectures and APIs to be more flexible and compatible with third-party systems, as the Apple case has already shown.

User profiling

Adapting recommendation systems to comply with new rules on profiling and user choice may require significant changes to AI-driven algorithms. Companies must find ways to provide personalised experiences while respecting user preferences and regulatory restrictions on data usage.
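
A minimal sketch of how a recommender could honour an opt-out from profiling-based recommendations is shown below. It assumes, purely for illustration, that each item has a global popularity score and that personalisation is a per-user affinity added on top; the `recommend` function and both score dictionaries are hypothetical.

```python
def recommend(
    popularity: dict[str, float],
    user_affinity: dict[str, float],
    profiling_opt_out: bool,
    k: int = 3,
) -> list[str]:
    """Return the top-k items, ignoring per-user signals if the user opted out."""
    if profiling_opt_out:
        # Non-personalised ranking: global popularity only.
        scores = dict(popularity)
    else:
        # Personalised ranking: popularity plus the user's affinity.
        scores = {
            item: pop + user_affinity.get(item, 0.0)
            for item, pop in popularity.items()
        }
    # Sort by descending score, breaking ties alphabetically for stable output.
    return sorted(scores, key=lambda item: (-scores[item], item))[:k]
```

The opt-out branch never reads `user_affinity`, which makes it easier to audit that no profile data influences the result.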

Ensuring AI systems don’t use prohibited data categories for ad targeting while maintaining effectiveness is a complex task that may require innovative approaches to privacy-preserving machine learning, such as differential privacy.
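
For readers unfamiliar with differential privacy, the sketch below shows its simplest building block, the Laplace mechanism, applied to a count (for example, how many users saw an ad). The function names are hypothetical and the choice of `epsilon` is an assumption left to the reader; this is an illustration of the technique, not a compliance recipe.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    true_count: int,
    epsilon: float,
    rng: random.Random,
    sensitivity: float = 1.0,
) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # A smaller epsilon means more noise and therefore stronger privacy.
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()
    print(private_count(1200, epsilon=0.5, rng=rng))
```

A sensitivity of 1 reflects the assumption that adding or removing one user changes the count by at most one.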

The fast-evolving nature of AI technology means actors must continuously update their compliance strategies. This demands ongoing vigilance and agility in regulatory compliance, potentially necessitating dedicated teams to monitor regulatory developments and adapt AI systems accordingly.

For companies operating across multiple EU countries, ensuring consistent compliance while navigating potential regulatory differences adds another layer of complexity.

Finally, it goes without saying that implementing systems to track and report on the performance and impact of AI for regulatory audits can be resource-intensive.

Our key takeaway

The Digital Markets Act (DMA) and Digital Services Act (DSA) represent significant regulatory efforts by the European Union to address challenges in the digital marketplace and promote fair competition, transparency, and user protection. These regulations are already substantially impacting major tech companies and their AI development practices, as evidenced by recent investigations into Apple and other tech giants.

While these regulations aim to foster innovation and protect consumers, they also present considerable compliance challenges for businesses, particularly in AI development. Companies must now navigate complex requirements related to algorithmic transparency, content moderation, data management, risk assessment, and interoperability.

In summary, the DMA and DSA mark a significant shift in the regulatory landscape for digital services and AI in Europe, with far-reaching implications for global tech companies. As these regulations continue to be implemented and enforced, they will likely have a lasting impact on the development and deployment of AI technologies, potentially setting new global standards for AI governance and digital market regulation.